In a Nutshell

The Cloud for AI infrastructure guarantees data sovereignty and ensures compliance with the EU AI Act by embedding advanced security mechanisms into every layer of the platform. The solution effectively mitigates emerging threats, such as prompt injection, jailbreaking, and training data leaks, by leveraging tools like the NeuralTrust AI Firewall for anomaly detection and a Federated Vector Store for decentralized data architecture. The innovation deployment process is further supported by tools that automate compliance and ethics: Credo AI (regulatory reporting), the AI Verify Toolkit (model fairness testing), and the Responsible AI Toolbox, which introduces the essential human-in-the-loop layer. This enables organizations to seamlessly adapt to evolving regulations while maintaining the highest security standards within a secure cloud environment.

In our previous article, we explored the landscape of AI tools available in the public cloud: Microsoft Azure, Google Cloud, and our own OChK Stack—a platform designed for organizations prioritizing tech sovereignty.

From this part, you’ll learn:

- what today’s AI threats look like (and why they’re so different),

- how to design an AI architecture that keeps your projects secure and compliant,

- what Cloud for AI is, and the thinking behind how we built it.

This is part two of a blog series on building AI in the cloud. To catch up, read: Where to Build AI Solutions? From Global Giants to Local Leaders. Cloud for AI – Part 1 and follow us on LinkedIn to stay updated.

Cloud for AI: Sovereign Infrastructure for Modern AI

Today’s organizations face an unprecedented challenge—trying to reconcile fire and water. How to build AI infrastructure that stands up to new, often unpredictable threats, while staying compliant with tightening regulations, maintaining full data control, delivering top-tier performance, and keeping enough flexibility to adapt as you grow?

To complicate matters further, the entire ecosystem is evolving at breakneck speed. New model versions, inference engines, and library updates often roll out in weeks—sometimes days.

Cloud for AI, a solution built by our experts based on OChK Stack, was designed as the answer to this challenge. It fundamentally redefines how organizations approach deploying and managing artificial intelligence systems.

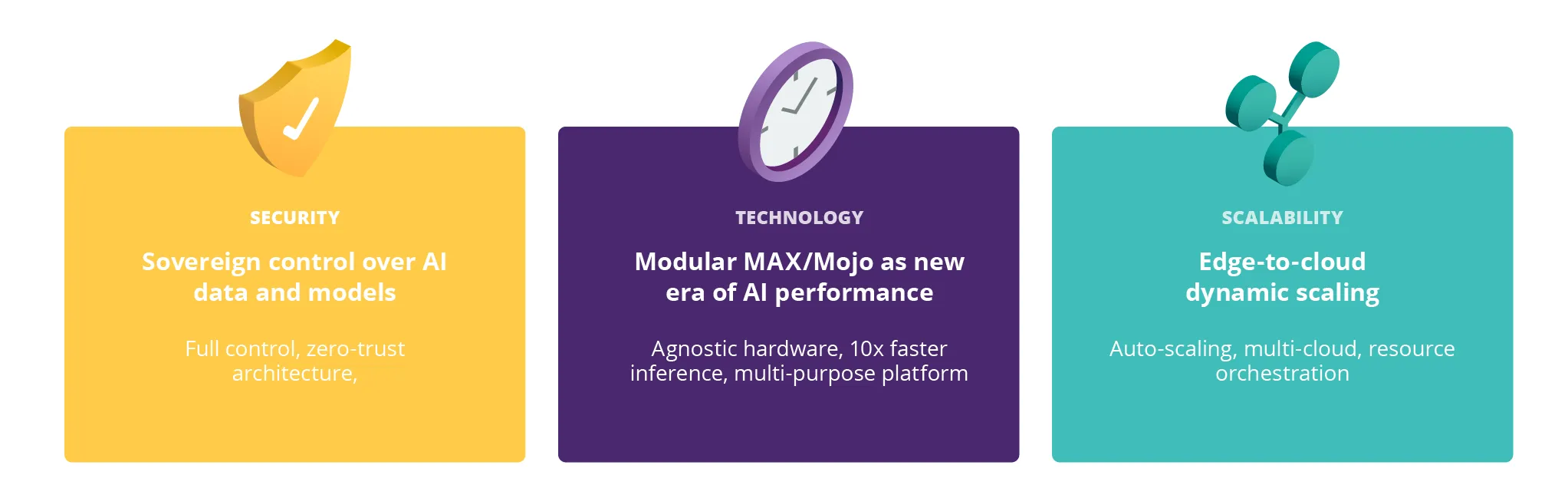

Fig. 1: Key Values of Cloud for AI

Cloud for AI lets you scale your solutions seamlessly—from single workstations to on-prem environments all the way to the public cloud. In this context, scaling doesn’t just mean ramping up resources on demand. It’s also about making strategic decisions around where your data and models live—including hybrid scenarios where components are distributed across on-prem infrastructure and cloud platforms.

This architecture is purpose-built to support projects ranging from small teams running a single GPU to sprawling multi-node environments with hundreds of GPUs—all without requiring you to touch a single line of your application code.

One of our foundational design principles was to minimize vendor lock-in by embracing an open technology ecosystem. Another was modularity—enabling independent development of components and the freedom to choose technologies that best fit your needs.

Cloud for AI builds on years of lessons learned by OChK. What you’re reading isn’t the whole blueprint—more like a starter kit to help you understand how to design AI infrastructure that’s secure and regulation-ready.

A New Threat Landscape for AI Systems

Large Language Models (LLMs) have radically transformed the way we handle data and automate business processes. Models like GPT-4, Claude, and Llama can produce text that’s nearly indistinguishable from human writing, analyze documents with unprecedented precision, and engage in sophisticated conversations.

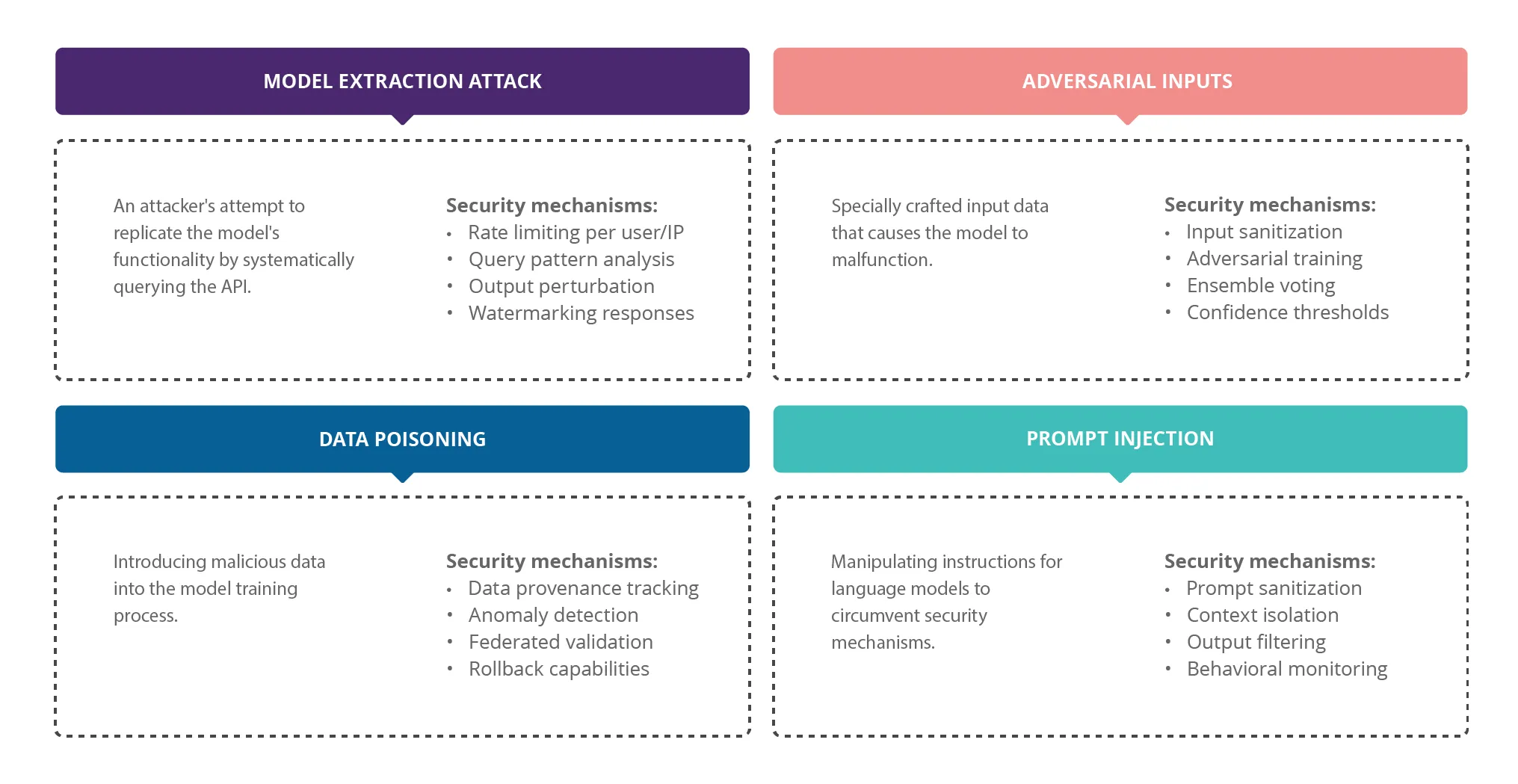

But the rise of AI hasn’t come without a dark side. Along with all that potential, entirely new categories of threats have emerged—threats whose scale and sophistication dwarf anything we’ve seen in traditional cybersecurity. What makes them especially dangerous is that they exploit the very abilities that make AI so powerful: to learn, adapt, and generalize. Here are just a few examples:

- Prompt Injection. Arguably the most critical risk today, where attackers embed specially crafted text into documents or prompts, causing the model to behave in ways that override its original instructions. It’s similar to hacking a system—not through code exploits, but by manipulating how the model understands and processes language.

- Jailbreaking. A technique where an attacker uses cleverly phrased prompts to bypass model safeguards. For example, asking the model to write a fictional story about a hacker can trick it into revealing real-world hacking techniques—thinking it’s just spinning a yarn.

- Training Data Leakage. A risk where language models memorize portions of their training data. With carefully engineered prompts, attackers can trick a model into revealing confidential information that was unintentionally included in the training set.

Fig. 2: New Types of Security Risks in the AI Era

Example: How to Clone a Closed Language Model Step by Step

1. API Reconnaissance and Fingerprinting

Public docs and developer forums often disclose which endpoints return raw token streams versus full logprobs (probabilities for each token). This makes it possible to craft a precise query plan and calculate operational costs by multiplying tokens processed by the per-token price.

2. Mass Key Harvesting

Hundreds of developer accounts, created using stolen or purchased identities, siphon off free credit pools. When needed, attackers buy extra quotas on gray markets or leverage gift cards, keeping traffic under the radar.

3. Prompt Factory

A pipeline running a cheaper open-source model generates millions of diverse prompts (translations, code, instructions, medical advice, literary text). Each prompt is sent to the teacher model (e.g., GPT-4) with a temperature just shy of hallucination to maximize output variety.

4. Knowledge Exfiltration

Over a few weeks, attackers amass a dataset containing tens of billions of tokens and capture top-k logits—critical for recreating the teacher model’s probability distributions. To avoid detection and throttling, requests are spread across hundreds of IP addresses and low-traffic time windows (like nighttime hours).

5. Distillation (Student Training)

Gathering fewer than 6 billion tokens and fine-tuning an open model (e.g., Yi-34B) on a 256×H800 cluster costs around $6 million—roughly 1% of the budget to train such a model from scratch. Logit matching is used to align the student model’s raw outputs with those of the teacher.

6. Validation and Covering Tracks

Results are benchmarked offline. If divergence exceeds 8%, a new round of prompts is run only in underperforming areas (active error-seeding). To mask the stylistic fingerprint, attackers mix in around 15% open-source model responses.

Sounds like a cyber-thriller or a Black Hat keynote? Unfortunately, it’s all too real. According to OpenAI and fragmented public disclosures, this is how China’s DeepSeek reportedly built its R1 model—by distilling knowledge from OpenAI systems. It’s a prime example that stealing a brain no longer requires breaking into a data center—just a credit card, some OSINT, and a solid prompt script.

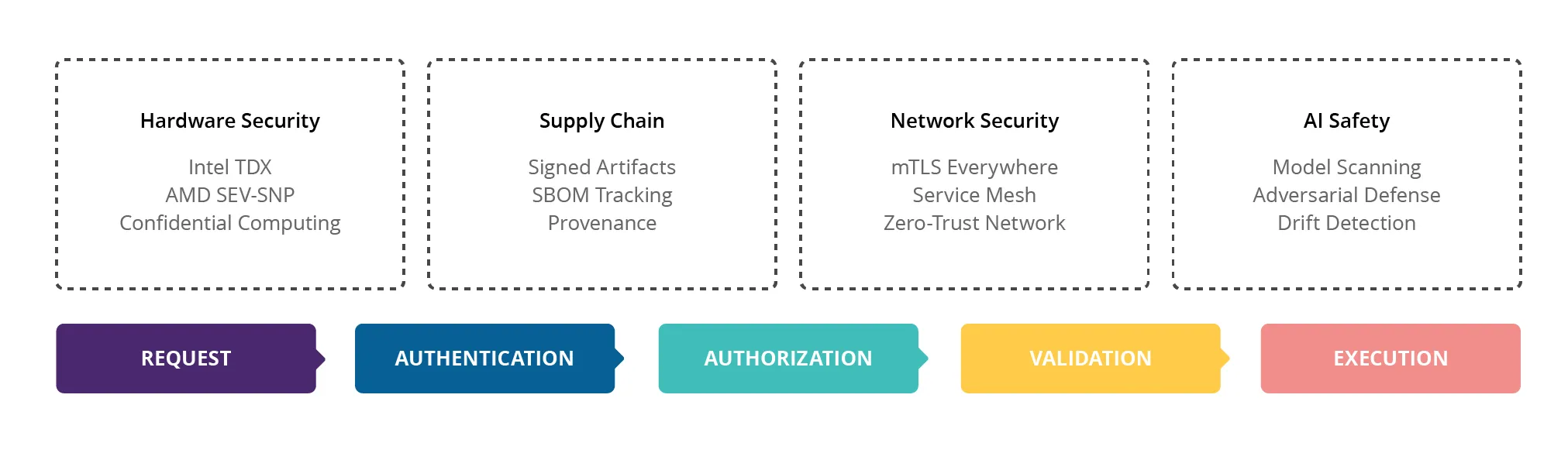

Cloud for AI Security Architecture

Cloud for AI bakes security into every layer of the stack. This isn’t an add-on or afterthought—it’s a foundational pillar of the platform.

Fig. 3: Multi-Layered Zero-Trust Model

The NeuralTrust AI Firewall acts as a gatekeeper, inspecting every incoming request. Using advanced anomaly detection, it can identify prompt injection or jailbreaking attempts. Each request is classified into three categories:

- Allow – Safe to forward to the model,

- Transform – Needs modification before processing (e.g., stripping potentially malicious fragments),

- Block – Clearly malicious and rejected outright.

Classification follows the OWASP GenAI Top-10—a dedicated security framework for generative AI systems. Every decision is logged for auditability, supporting full compliance with NIS2 incident reporting.

Meanwhile, the Federated Vector Store addresses data security by design. Rather than storing everything in one juicy target for attackers, the platform relies on a distributed architecture. Each tenant has an isolated vector database instance. Data never co-mingles, and cross-tenant access is structurally impossible.

Meeting AI Act and GDPR Requirements

The EU AI Act categorizes AI systems by four risk levels: unacceptable, high, limited, and minimal. Most business use cases fall into the high or limited risk categories. This means organizations need to re-architect their systems to comply with a long list of obligations, including:

- implementing or updating risk management processes specific to AI systems,

- managing data governance, including transparent training dataset descriptions and validation processes,

- preparing and maintaining detailed technical documentation covering algorithm specs,

- recording and monitoring events throughout the AI system lifecycle.

Cloud for AI was built from the ground up to enable full AI Act compliance. Even better, it allows for easy adaptation to future regulations, treating regulatory compliance not as a one-off milestone but as an ongoing process. Here are just a few tools we leverage:

- Credo AI automatically generates compliance reports in SARIF (Static Analysis Results Interchange Format), a globally recognized standard used by auditors.

- AI Verify Toolkit runs automated fairness and ethics tests. For any high-risk model, the system automatically:

- checks for bias across demographic groups,

- verifies the model doesn’t discriminate on protected attributes,

- evaluates stability and predictability,

- outputs reports formatted to meet EU AI Act requirements.

- Responsible AI Toolbox – by Microsoft adds a HITL (Human-in-the-Loop) layer for critical decisions, routing unusual or high-risk outputs for manual review to ensure oversight.

Fig. 4: AI Act-Ready: Compliance as a Competitive Edge

Under GDPR, users have the right to erase their data. In AI systems, this is especially challenging—models can remember training inputs. Cloud for AI tackles this with tools such as:

- LakeFS – A data versioning system working like Git for data. Every dataset change is tracked, and deleting a user’s data triggers a new dataset version without those records.

- DVC (Data Version Control) complements LakeFS by versioning derived features. When a deletion request comes in, the system can revert to a pre-deletion state and retrain the model.

- Synthcity – A synthetic data generator enabling testing without real personal data—particularly useful for load tests and security validation.

Summary

One of the biggest challenges in designing AI infrastructure is protecting it against threats we haven’t even discovered yet. AI systems—especially large language models—can develop unexpected emergent capabilities. That’s why it’s critical to build environments for AI projects consciously and predictably.

Cloud for AI, developed by OChK experts, helps you stay ahead of next-generation risks like prompt injection and jailbreaking—and adapt to those that haven’t even surfaced.

Now you know how we designed Cloud for AI’s security architecture to guard against cyber threats and meet the demands of the AI Act and GDPR. In our next article, we’ll share how we tackle AI’s other unique challenges—unpredictable workloads, the need for instant scaling, constant model and library updates, sub-millisecond latency requirements, and full control over your data and models.

Want to dive deeper into Cloud for AI? Get in touch with us.