AI-powered solutions, including Machine Learning models, have become an integral part of almost every business today. The days of AI as a shiny, awe-inspiring "unicorn" are largely behind us, at least as far as its business value is concerned, yet the inner workings of AI algorithms and their final outputs are still far from black and white. The drive for quick business wins, paired with lingering uncertainty over whether those wins are actually achievable, leads many organizations to take a cautious approach: before committing to a full-scale rollout of a new solution, they often opt for a hands-on validation phase. This is where two key concepts come into play: PoC (Proof of Concept) and MVP (Minimum Viable Product). In this article, you'll learn how PoC and MVP fit into Machine Learning implementations and what benefits they can bring to your business. We'll also walk you through real-world PoC and MVP projects delivered by the OChK team for clients across different industries.

Understanding the Difference Between MVP and PoC

Prototyping new Machine Learning solutions and testing different stages of product development through PoC and MVP allows your organization to unlock real business value while keeping investments lean and timelines short. While both terms are well-known and widely used across the tech industry, in the context of AI/ML projects they take on added significance due to the complexity, risk, and high costs often associated with these initiatives.

We also covered building effective Machine Learning projects in our article "How to Build Effective Machine Learning Solutions in 3 Steps?" Check it out if you want to tap into the full potential of ML and are looking for practical guidance on planning your ML deployment journey.

What Is a PoC (Proof of Concept)?

A PoC is the initial validation of an idea, primarily on a technical level but also as an early gauge of its business potential. Because a PoC is essentially an experiment rather than a product, its main goal is to test whether a particular technological approach can meet user expectations and provide a solid foundation for further product development.

Typical characteristics of an AI/ML PoC include working with a limited dataset, focusing on a core technological hypothesis, and operating within a short timeframe—days or weeks rather than months or years.

What Is an MVP (Minimum Viable Product)?

An MVP, on the other hand, is the minimal version of a product that already includes key functionalities, works with real-world data, and delivers tangible business value to end users. While it may still be simplified compared to the final version—missing secondary features or targeting only a specific user segment—it allows teams to quickly gather user feedback and validate whether the product meets their needs.

Ultimately, an MVP helps teams adjust course as needed and gradually evolve the solution into a fully mature product.

When to Choose PoC vs. MVP?

Deciding whether to start with a PoC or move directly to an MVP largely depends on your level of technological and business certainty.

| PoC | MVP |
|---|---|
| When there are significant doubts about whether AI/ML can solve the problem. | When the technology has already been validated (e.g., via a PoC or existing research). |
| When technological risk is high (e.g., lack of data, uncertain outcomes). | When the key risk lies in market or user acceptance rather than technology itself. |
| When the cost of full system deployment would be unjustifiable without initial proof of success. | When the goal is to launch quickly with a minimal product and improve it through iterations. |
| When internal buy-in needs to be secured (e.g., for project funding). | When the organization is ready to adopt the technology (people, processes, systems are prepared). |

In practice, AI/ML projects often begin with a PoC and, if successful, naturally progress to the MVP stage.

Validating Ideas and Technologies: Real-Life Examples of PoC and MVP in Action

At OChK, we frequently work with clients following this very model—using PoC and MVP as key tools for validating AI/ML use cases before scaling them. Below, you'll find three real-world examples of business projects delivered as PoC or MVP. They might just inspire you to explore similar AI/ML opportunities within your own organization.

1. MVP: Vision AI for an Industrial Sector Company

10 Weeks to Production Phase

Our client wanted to automate the material handling process on their production lines, ensuring that materials were properly categorized with minimal human intervention. To achieve this, cameras were installed above the production lines to capture images of the transported materials. Vision AI models then classified each item by type, and once the predictions had been verified, the materials were sorted into the correct categories.

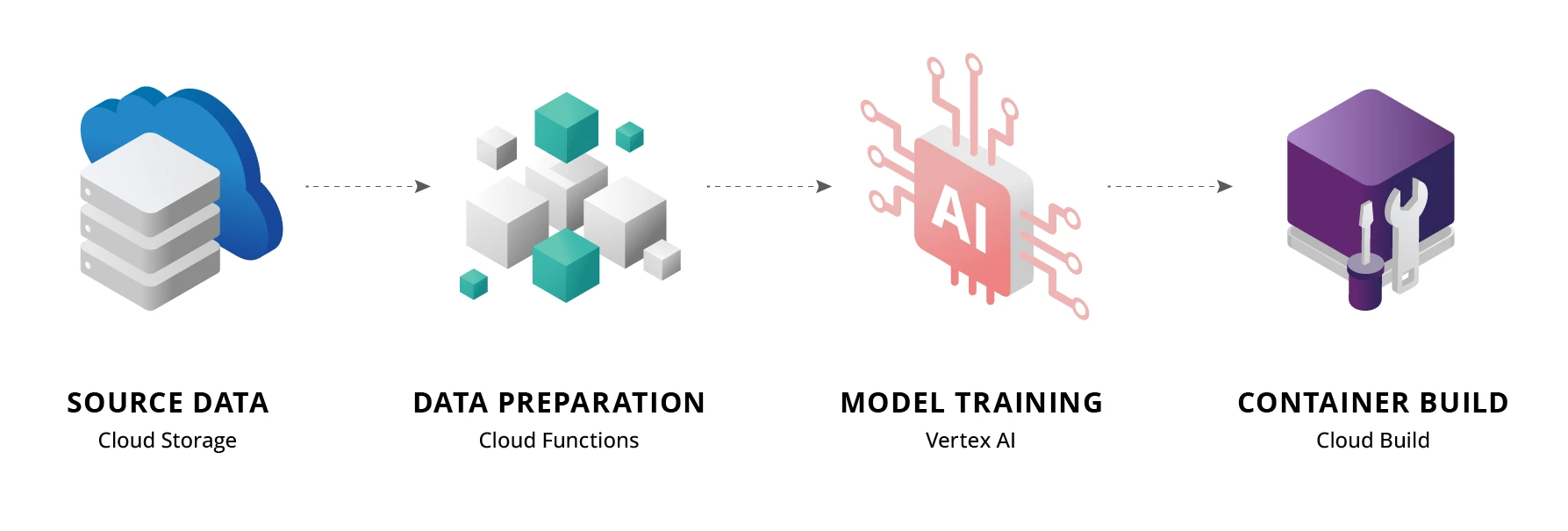

Fig. 1: How the solution worked

We started by preprocessing the source data: camera images and metadata files containing details about the production line and the visible products. This involved standardizing image quality, augmenting the images with rotations, zoom-ins, and zoom-outs, and then preparing the training datasets for the model.
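The augmentation step can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's actual pipeline: it assumes square grayscale images stored as 2-D arrays, and the function names, the 1.25 zoom factor, nearest-neighbour resizing, and per-image z-score normalization (as one simple form of quality standardization) are our own assumptions.

```python
import numpy as np

def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Resize a 2-D image using nearest-neighbour sampling."""
    in_h, in_w = img.shape
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows[:, None], cols[None, :]]

def zoom_in(img: np.ndarray, factor: float) -> np.ndarray:
    """Crop the centre (1/factor of each side) and scale it back to the original size."""
    h, w = img.shape
    ch, cw = max(1, int(h / factor)), max(1, int(w / factor))
    top, left = (h - ch) // 2, (w - cw) // 2
    return resize_nearest(img[top:top + ch, left:left + cw], h, w)

def zoom_out(img: np.ndarray, factor: float) -> np.ndarray:
    """Shrink the image by `factor` and pad it back to the original size with zeros."""
    h, w = img.shape
    sh, sw = max(1, int(h / factor)), max(1, int(w / factor))
    out = np.zeros_like(img)
    top, left = (h - sh) // 2, (w - sw) // 2
    out[top:top + sh, left:left + sw] = resize_nearest(img, sh, sw)
    return out

def standardize(img: np.ndarray) -> np.ndarray:
    """Scale pixel values to zero mean and unit variance."""
    img = img.astype(np.float64)
    return (img - img.mean()) / (img.std() + 1e-8)

def augment(img: np.ndarray) -> list[np.ndarray]:
    """Original image plus 90/180/270-degree rotations and zoomed variants (square input assumed)."""
    variants = [img] + [np.rot90(img, k) for k in (1, 2, 3)]
    variants += [zoom_in(img, 1.25), zoom_out(img, 1.25)]
    return [standardize(v) for v in variants]

# Usage on a toy 8x8 "image"
image = np.arange(64, dtype=np.float64).reshape(8, 8)
batch = augment(image)
print(len(batch), batch[0].shape)  # 6 (8, 8)
```

Real projects usually rely on an augmentation library with arbitrary-angle rotations and interpolation; the NumPy version just makes each transform explicit.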

Using a multiclass classification model, we trained the system to recognize several dozen product categories from the images. The model achieved over 90% accuracy when tested on previously unseen data.
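With several dozen classes, a single overall accuracy figure can hide categories the model handles poorly. A minimal sketch, assuming true and predicted labels are available as plain Python lists, that reports both overall accuracy and per-class accuracy (i.e., recall for each class) on a held-out set. The labels below are invented toy data, not project results.

```python
from collections import Counter, defaultdict

def accuracy_report(y_true: list[str], y_pred: list[str]) -> tuple[float, dict[str, float]]:
    """Overall accuracy and per-class accuracy (recall) on held-out data."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    totals = Counter(y_true)
    hits: dict[str, int] = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        if t == p:
            hits[t] += 1
    overall = sum(hits.values()) / len(y_true)
    per_class = {label: hits[label] / n for label, n in totals.items()}
    return overall, per_class

# Toy example: 90% overall accuracy still hides a class the model gets only half right.
y_true = ["steel"] * 4 + ["copper"] * 2 + ["plastic"] * 4
y_pred = ["steel"] * 4 + ["plastic", "copper"] + ["plastic"] * 4
overall, per_class = accuracy_report(y_true, y_pred)
print(f"overall={overall:.2f}")  # overall=0.90
print(per_class)  # {'steel': 1.0, 'copper': 0.5, 'plastic': 1.0}
```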

The exercise covered several production lines, with the trained models being rolled out progressively into production. The entire process—from data preparation to a working production-grade model—was completed in under 10 weeks.

An interesting twist? Although the project utilized cloud technologies throughout the development phase, the final solution was delivered as a containerized on-premises application, fully aligned with the client's internal infrastructure and security requirements.

Pro tip: When working on AI/ML projects—especially during PoC or MVP phases—never assume that the model "sees" your data the same way a human does. Vision AI algorithms can pick up patterns that seem irrelevant or even invisible to domain experts. In our case, the model mistakenly learned to identify one product category not by the object itself, but by the background that consistently appeared in images from a specific camera angle. This led to hard-to-explain misclassifications. Always scrutinize your training data for hidden biases and test your model across varied data sources before moving to production.
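One practical way to surface this kind of shortcut learning is to break evaluation results down by data source, such as the camera that took each image, instead of relying on the aggregate score alone. A minimal sketch, assuming each evaluation record carries a hypothetical `camera_id` field alongside the true and predicted labels; the 0.8 alert threshold is arbitrary.

```python
from collections import defaultdict
from typing import NamedTuple

class EvalRecord(NamedTuple):
    camera_id: str   # hypothetical metadata field identifying the image source
    true_label: str
    pred_label: str

def accuracy_by_source(records: list[EvalRecord]) -> dict[str, float]:
    """Accuracy computed separately for each camera (data source)."""
    hits: dict[str, int] = defaultdict(int)
    counts: dict[str, int] = defaultdict(int)
    for r in records:
        counts[r.camera_id] += 1
        hits[r.camera_id] += r.true_label == r.pred_label
    return {cam: hits[cam] / counts[cam] for cam in counts}

def flag_weak_sources(records: list[EvalRecord], min_accuracy: float = 0.8) -> list[str]:
    """Cameras whose accuracy falls below the threshold and deserve a closer look."""
    return sorted(cam for cam, acc in accuracy_by_source(records).items() if acc < min_accuracy)

records = [
    EvalRecord("cam_A", "steel", "steel"),
    EvalRecord("cam_A", "copper", "copper"),
    EvalRecord("cam_B", "steel", "copper"),
    EvalRecord("cam_B", "copper", "copper"),
]
print(accuracy_by_source(records))  # {'cam_A': 1.0, 'cam_B': 0.5}
print(flag_weak_sources(records))   # ['cam_B']
```

A complementary check is to hold out all images from one camera during training and evaluate on them separately; a large drop suggests the model is keying on source-specific cues such as the background.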

2. MVP: Predictive Anti-Churn Model for an E-Commerce Company

6 Weeks to Production Phase

This project marked one of the client's first forays into applying Machine Learning to their core business operations. The goal was ambitious: to proactively identify customers at risk of churning and take timely action to prevent it.

Building the model itself, a standard supervised classification task (predicting a 0/1 churn flag), was relatively straightforward. The real challenge lay elsewhere: designing the right offer and running an effective retention campaign.

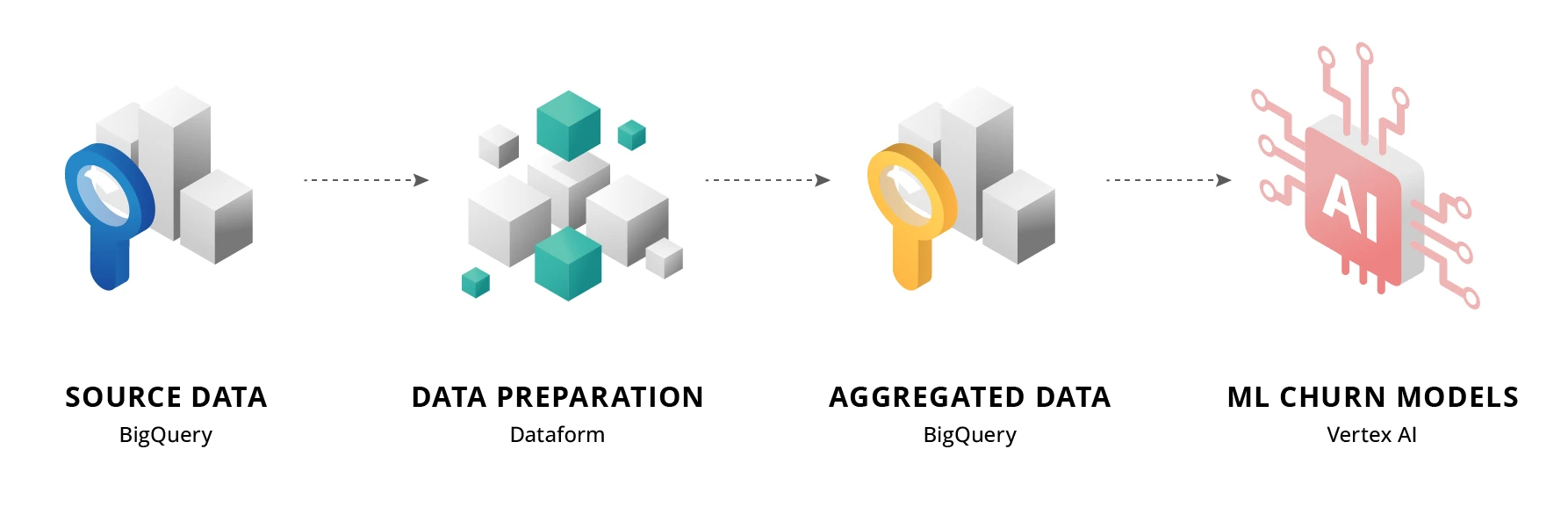

Fig. 2: How the solution worked

Unlike the previous project, which relied on visual data, this one focused on structured data: customer attributes stored in relational databases. To maximize the predictive power of these variables, the data was aggregated wherever possible using sums, maximum values, and trends calculated over several time horizons. This enabled the models to capture both short-term and long-term behavior patterns.
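The multi-horizon aggregation can be sketched as follows. This is an illustration only: it assumes a flat list of purchase transactions with hypothetical fields (customer ID, date, amount), and the 30/90/180-day windows and the trend definition (recent spend relative to the longer-term monthly average) are our assumptions, not the features used in the project.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical transaction record: (customer_id, purchase_date, amount)
Transaction = tuple[str, date, float]
WINDOWS = (30, 90, 180)  # look-back horizons in days (illustrative choice)

def aggregate_features(transactions: list[Transaction], as_of: date) -> dict[str, dict[str, float]]:
    """Per-customer sum, max and count of spend over several windows, plus a trend ratio."""
    customers = {customer for customer, _, _ in transactions}
    features: dict[str, dict[str, float]] = {c: {} for c in customers}
    for days in WINDOWS:
        start = as_of - timedelta(days=days)
        amounts: dict[str, list[float]] = defaultdict(list)
        for customer, when, amount in transactions:
            if start < when <= as_of:  # the `days` days ending on as_of
                amounts[customer].append(amount)
        for customer in customers:
            values = amounts.get(customer, [])
            features[customer][f"sum_{days}d"] = float(sum(values))
            features[customer][f"max_{days}d"] = max(values, default=0.0)
            features[customer][f"count_{days}d"] = float(len(values))
    # Trend: last-30-day spend relative to the average 30-day spend over the last 180 days.
    # Values well below 1.0 indicate declining activity.
    for feats in features.values():
        monthly_avg = feats["sum_180d"] / 6
        feats["trend_30_vs_180"] = feats["sum_30d"] / monthly_avg if monthly_avg else 0.0
    return features

txns = [
    ("c1", date(2024, 6, 20), 50.0),
    ("c1", date(2024, 4, 15), 120.0),
    ("c1", date(2024, 1, 10), 200.0),
    ("c2", date(2024, 6, 25), 80.0),
]
feats = aggregate_features(txns, as_of=date(2024, 6, 30))
print(round(feats["c1"]["trend_30_vs_180"], 2))  # 0.81
```

In production this would more likely be a SQL or DataFrame group-by; the loop form simply makes the windowing logic explicit.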

The result? The models could identify customers at risk of churning with up to several times higher precision than traditional methods. The key success metric was capturing as many true churners as possible while keeping the number of customers targeted by the retention campaign to a minimum.
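That trade-off is commonly measured at a fixed campaign size: rank customers by predicted churn probability, target the top k, and compute precision (share of targeted customers who actually churn), recall (share of all churners reached), and lift over the base churn rate. A minimal sketch with made-up scores and labels, not project data:

```python
def campaign_metrics(scores: list[float], churned: list[int], k: int) -> dict[str, float]:
    """Precision, recall and lift when the retention campaign targets the top-k scored customers."""
    if len(scores) != len(churned) or not 0 < k <= len(scores):
        raise ValueError("invalid k or mismatched inputs")
    ranked = sorted(zip(scores, churned), key=lambda pair: pair[0], reverse=True)
    caught = sum(label for _, label in ranked[:k])
    total_churners = sum(churned)
    precision = caught / k
    recall = caught / total_churners if total_churners else 0.0
    base_rate = total_churners / len(churned)
    lift = precision / base_rate if base_rate else 0.0
    return {"precision": precision, "recall": recall, "lift": lift}

scores  = [0.91, 0.85, 0.40, 0.77, 0.10, 0.05, 0.63, 0.20, 0.15, 0.30]
churned = [1,    1,    0,    1,    0,    0,    0,    0,    0,    1]
metrics = campaign_metrics(scores, churned, k=3)
# Targeting the top 3 catches 3 of the 4 churners: precision 1.0, recall 0.75, lift 2.5
print(metrics)
```

Sweeping k and plotting recall against campaign size makes the trade-off between reach and cost visible to the business side.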

Pro tip: In the race to deploy advanced algorithms and load your models with as many engineered features as possible, it's easy to overlook the basics. Before you spend hours fine-tuning your models, double-check for data leakage—a common but costly pitfall. In this case, the client team initially reported surprisingly high model accuracy on both training and validation datasets... only to discover that one of the input attributes was indirectly leaking information about the target variable.
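A cheap first screen for leakage is to score each feature on its own against the target: a single raw attribute that separates churners from non-churners almost perfectly is a red flag worth investigating before any tuning. A minimal sketch, assuming numeric features held in plain lists; it computes a rank-based (Mann-Whitney) AUC per feature, and the 0.95 cut-off and feature names are illustrative assumptions.

```python
def single_feature_auc(values: list[float], target: list[int]) -> float:
    """AUC of one feature used directly as a score (pairwise Mann-Whitney formulation)."""
    pos = [v for v, t in zip(values, target) if t == 1]
    neg = [v for v, t in zip(values, target) if t == 0]
    if not pos or not neg:
        raise ValueError("target must contain both classes")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))

def suspicious_features(features: dict[str, list[float]], target: list[int],
                        threshold: float = 0.95) -> list[str]:
    """Features that on their own almost perfectly separate the classes, in either direction."""
    flagged = []
    for name, values in features.items():
        auc = single_feature_auc(values, target)
        if max(auc, 1.0 - auc) >= threshold:
            flagged.append(name)
    return flagged

target = [1, 1, 1, 0, 0, 0, 0, 0]
features = {
    "orders_last_90d": [1, 0, 3, 2, 5, 1, 4, 2],
    "closure_requests_total": [1, 2, 1, 0, 0, 0, 0, 0],  # hypothetical leaky attribute
}
print(suspicious_features(features, target))  # ['closure_requests_total']
```

A flagged feature is not proof of leakage; the follow-up question is whether its value would genuinely be known at prediction time, before the churn event.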

3. PoC: AI-Tagged Video Library for a Telecom Company

3 Weeks of Preparation; Project Remained at Concept Stage

The client's main objective was to find an AI-based solution that would streamline the process of searching through their vast internal video archives and enrich them with metadata such as timestamp, topic, or speaker.

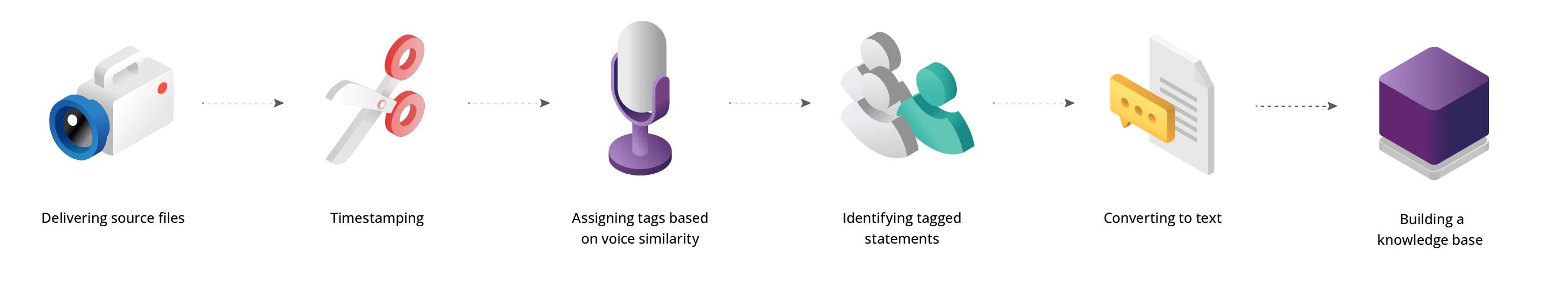

The source data consisted of the client's existing video recordings and a set of pre-labeled voice samples. Selected video segments were processed by an initial module that detected the start and end of each utterance and identified different speakers—not by name, but by assigning generic tags like "SPEAKER01" based on voice similarity.

These extracted speech segments were then compared against the pre-registered voiceprints of named individuals. If the similarity score crossed a defined threshold, the system attributed the segment to a specific person. All identified statements were indexed into a searchable knowledge base, enabling fast and efficient filtering by speaker, topic, or speech duration.

Fig. 3: How the solution worked
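The attribution and search steps described for this PoC can be sketched as follows. This assumes each speech segment has already been turned into a fixed-length voice embedding by a speaker-recognition model (not shown); cosine similarity, the 0.75 threshold, the `Segment` fields, the person names, and the toy 3-dimensional vectors are all illustrative assumptions, and a plain in-memory list stands in for the knowledge base.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class Segment:
    diarization_tag: str            # generic tag such as "SPEAKER01"
    start_s: float
    end_s: float
    topic: str
    embedding: list[float]          # voice embedding from a speaker model (assumed)
    speaker: Optional[str] = None   # set when a voiceprint matches

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def attribute_speakers(segments: list[Segment], voiceprints: dict[str, list[float]],
                       threshold: float = 0.75) -> None:
    """Assign each segment to the best-matching enrolled person if similarity reaches the threshold."""
    for seg in segments:
        best_name, best_score = None, -1.0
        for name, voiceprint in voiceprints.items():
            score = cosine(seg.embedding, voiceprint)
            if score > best_score:
                best_name, best_score = name, score
        seg.speaker = best_name if best_score >= threshold else None

def search(segments: list[Segment], speaker: Optional[str] = None, topic: Optional[str] = None,
           min_duration_s: float = 0.0) -> list[Segment]:
    """Filter indexed segments by speaker, topic and minimum duration."""
    return [s for s in segments
            if (speaker is None or s.speaker == speaker)
            and (topic is None or s.topic == topic)
            and s.end_s - s.start_s >= min_duration_s]

voiceprints = {"Anna": [1.0, 0.0, 0.0], "Marek": [0.0, 1.0, 0.0]}  # hypothetical enrolled people
segments = [
    Segment("SPEAKER01", 0.0, 12.5, "pricing", [0.9, 0.1, 0.0]),
    Segment("SPEAKER02", 12.5, 20.0, "pricing", [0.1, 0.95, 0.1]),
    Segment("SPEAKER03", 20.0, 24.0, "roadmap", [0.5, 0.5, 0.7]),  # no confident match
]
attribute_speakers(segments, voiceprints)
print([s.speaker for s in segments])  # ['Anna', 'Marek', None]
```

Leaving low-similarity segments unattributed, rather than forcing the nearest match, trades coverage for fewer wrong attributions, which matters given how strongly occasional errors shaped perceived quality in this project.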

Although the PoC produced satisfactory technical results, the solution never made it beyond the concept stage.

Pro tips:

- Not every PoC makes it to production, and that's a perfectly normal part of the AI project lifecycle. Sometimes the reason is a gap between the prototype's capabilities and the project's requirements. In this case, the reason was much simpler: the client shifted priorities and decided not to invest further in developing the solution.

- Even when using reliable, off-the-shelf libraries for tasks like speech segmentation or speaker recognition, don't fall into the trap of assuming AI will "magically handle it all." In this project, the system worked well most of the time, but occasional misclassifications severely hurt the perceived quality of the overall solution. We repeatedly found ourselves asking: why was this segment split, but not that one? Why was this speech attributed to Speaker A and not Speaker B? Worse still, such errors were often hard to debug, with no clear cause. There are ways to improve accuracy, such as audio standardization, hyperparameter tuning, or noise reduction, but moving from "good enough" to "excellent" quality can require disproportionately more effort.
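Of the accuracy levers mentioned in the last tip, audio standardization is the simplest to illustrate. A minimal NumPy sketch, assuming audio is already decoded into a float array (samples, or samples × channels) at a common sample rate: it down-mixes to mono, removes any DC offset, and scales loudness to a target RMS level. The -20 dBFS target is an arbitrary choice; resampling and noise reduction are left out.

```python
import numpy as np

def standardize_audio(samples: np.ndarray, target_dbfs: float = -20.0) -> np.ndarray:
    """Down-mix to mono, remove DC offset, and scale to a target RMS level in dBFS."""
    audio = samples.astype(np.float64)
    if audio.ndim == 2:               # (n_samples, n_channels) -> mono
        audio = audio.mean(axis=1)
    audio = audio - audio.mean()      # remove DC offset
    rms = np.sqrt(np.mean(audio ** 2))
    if rms == 0.0:
        return audio                  # silence: nothing to scale
    target_rms = 10.0 ** (target_dbfs / 20.0)
    audio = audio * (target_rms / rms)
    return np.clip(audio, -1.0, 1.0)  # keep samples within full scale

# Usage: a 1-second, 440 Hz stereo test tone with a DC offset, sampled at 16 kHz
t = np.linspace(0.0, 1.0, 16000, endpoint=False)
tone = np.sin(2 * np.pi * 440 * t)
stereo = np.stack([0.5 * tone, 0.3 * tone], axis=1) + 0.1
out = standardize_audio(stereo)
rms_dbfs = 20 * np.log10(np.sqrt(np.mean(out ** 2)))
print(out.shape, round(float(rms_dbfs), 2))  # (16000,) -20.0
```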

Key Benefits of PoC and MVP

As the examples above demonstrate, using PoC (Proof of Concept) and MVP (Minimum Viable Product) to test AI technologies brings numerous tangible benefits.

Reducing Investment Risk

One of the primary advantages of the PoC and MVP approach is the low upfront investment. This means that even if you decide to abandon the project—or it fails to meet your expectations, whether technical or business—the incurred losses are significantly lower than if you had built the full-fledged product from the start. PoCs and MVPs allow you to test innovative ideas in a controlled, low-risk environment, continuously evaluating their potential and making informed decisions about whether to scale, iterate, or pivot—without committing all your organizational resources upfront.

Accelerating Idea Validation

PoCs and MVPs also significantly shorten the time from concept to business validation. A full-scale overhaul of components like a Data Platform or Analytics Platform could easily take quarters—or even years—especially when it involves migrating historical data or integrating multiple data sources from different systems. In contrast, with a well-scoped PoC or MVP, you can often go from idea to tangible outcomes within weeks or a few months, as shown in the projects above.

Efficient Use of Resources

Both PoCs and MVPs let teams move fast and innovate without waiting for every formal project phase to be completed or for the full analytical infrastructure to be in place. This doesn't mean you can ignore data governance, Feature Engineering, or MLOps entirely. But at the PoC/MVP stage, you can focus on the essential components needed to test your core hypothesis, apply pragmatic shortcuts, and leave the full complexity for later stages.

For example, you don't need data from every system or their full historical depth—selected sources and shorter timeframes often suffice. Instead of a sophisticated Feature Engineering platform with hundreds of aggregation types, you might use a simplified version supporting just a dozen standardized aggregations. Similarly, you can postpone full MLOps automation—at a small scale, manual model lifecycle management can still be effective.
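To make "manual model lifecycle management" concrete: at PoC/MVP scale, a versioned folder per model plus a small JSON metadata file can stand in for a full MLOps registry. A minimal sketch using only the Python standard library; the directory layout, file names, metadata fields, and metric values are our own assumptions, and the model is stored as an opaque pickled object.

```python
import json
import pickle
import tempfile
from datetime import datetime, timezone
from pathlib import Path

def register_model(registry_dir: Path, name: str, model: object, metrics: dict[str, float]) -> int:
    """Save a model as the next version of `name` together with its evaluation metrics."""
    model_dir = registry_dir / name
    model_dir.mkdir(parents=True, exist_ok=True)
    existing = [int(p.name[1:]) for p in model_dir.glob("v*") if p.is_dir() and p.name[1:].isdigit()]
    version = max(existing, default=0) + 1
    version_dir = model_dir / f"v{version}"
    version_dir.mkdir()
    with open(version_dir / "model.pkl", "wb") as f:
        pickle.dump(model, f)
    metadata = {"version": version,
                "created_utc": datetime.now(timezone.utc).isoformat(),
                "metrics": metrics}
    (version_dir / "metadata.json").write_text(json.dumps(metadata, indent=2))
    return version

def best_version(registry_dir: Path, name: str, metric: str) -> int:
    """Version with the highest value of `metric`, as input to a manual promotion decision."""
    scored = []
    for meta_file in (registry_dir / name).glob("v*/metadata.json"):
        meta = json.loads(meta_file.read_text())
        scored.append((meta["metrics"][metric], meta["version"]))
    if not scored:
        raise FileNotFoundError(f"no versions registered for {name!r}")
    return max(scored)[1]

def load_model(registry_dir: Path, name: str, version: int) -> object:
    # Only unpickle files you created yourself: pickle can execute arbitrary code.
    with open(registry_dir / name / f"v{version}" / "model.pkl", "rb") as f:
        return pickle.load(f)

# Usage with a throwaway directory and placeholder "models"
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    register_model(root, "churn", {"weights": [0.1, 0.2]}, {"recall_at_top10pct": 0.41})
    register_model(root, "churn", {"weights": [0.3, 0.1]}, {"recall_at_top10pct": 0.47})
    best = best_version(root, "churn", "recall_at_top10pct")
    restored = load_model(root, "churn", best)
print(best, restored)  # 2 {'weights': [0.3, 0.1]}
```

Once the number of models or retraining frequency grows, this is exactly the part worth replacing with a proper model registry and automated pipelines.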

Summary

We all want to deliver projects faster, better, and cheaper, especially those leveraging Machine Learning to address market needs and build competitive advantage. PoC and MVP approaches provide a proven, low-risk alternative to long-term, capital-intensive investments, allowing you to test and validate new solutions quickly.

Metaphorically speaking, they serve as the first filter to distinguish your "small fish" from the "big fish" in terms of business value. While PoC focuses on validating the technological feasibility of your idea, MVP allows you to develop a basic, working version of the product. Both approaches enable fast deployment and iterative improvements based on real-world feedback.

The examples of AI/ML projects delivered using PoC and MVP models clearly show the many benefits of this approach—including reduced investment risk, resource efficiency, and the agility to align your solutions with market needs.

If you'd like to learn more about the solutions we build, visit our Data/AI page. If you already have an idea and would like support from our experts, get in touch with us.